Ever wondered why your phone works the same way whether it's a Samsung, Google Pixel, or OnePlus? That's Android doing its magic: one operating system running on thousands of different devices. Now Google's trying the same trick, but for robots.

And honestly? It's kind of mind-blowing.

Google DeepMind just dropped something called Gemini Robotics, and it's basically an attempt to build a universal "brain" that can control very different robot bodies. We're talking robotic arms, humanoid bots, four-legged rovers, you name it. One AI system, many possibilities.

What Actually Is Google's Universal Robot AI?

Let's break this down without the tech mumbo-jumbo.

Traditional robots are like that friend who can only cook one dish really well. Each robot needs its own special training, its own programming, and its own way of understanding the world. Want a robot arm to pick up boxes? That's months of specialized training. Need a humanoid robot to navigate stairs? That's a completely different program.

Gemini Robotics flips this whole approach on its head.

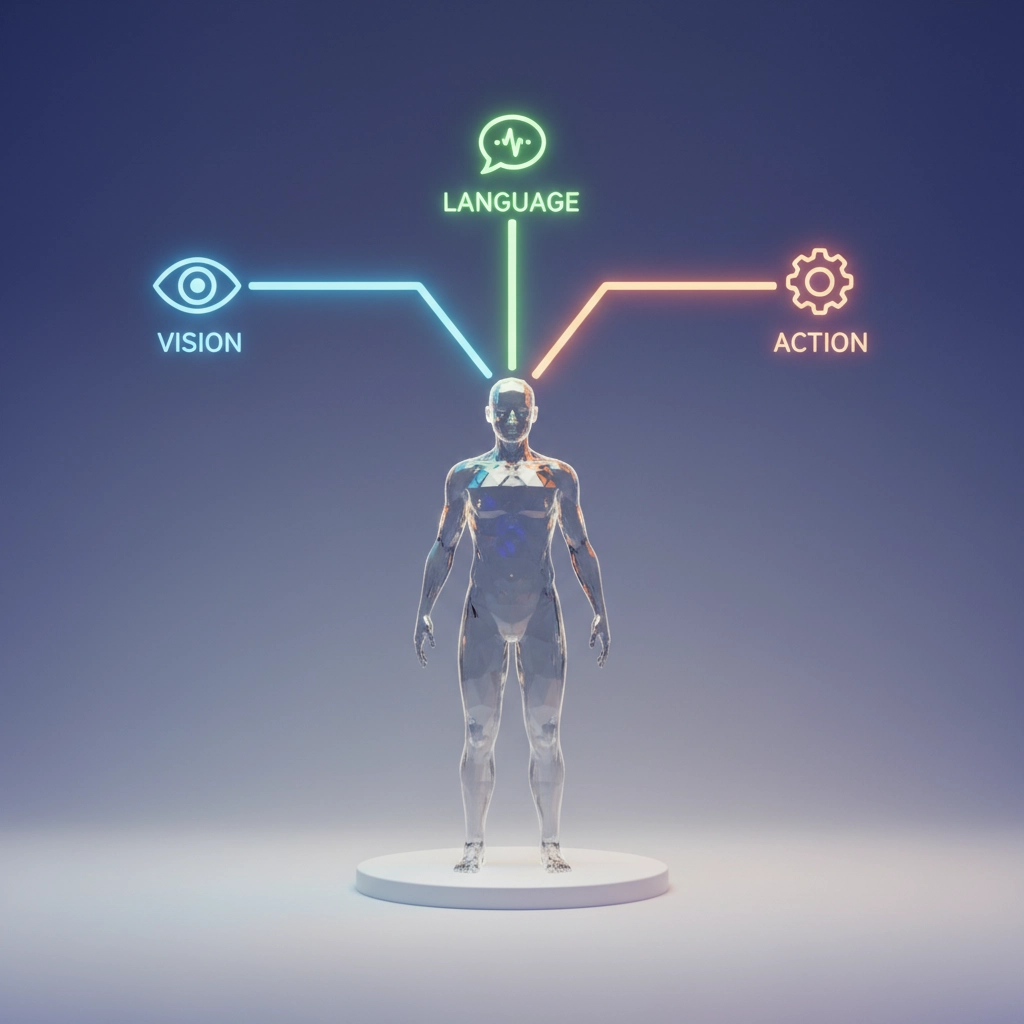

It works through what researchers call a "vision-language-action" model. Think of it as giving robots three superpowers:

- Seeing: Processing what's around them through cameras and sensors

- Understanding: Interpreting human instructions given in plain, everyday language

- Acting: Converting those instructions into precise movements

But here's the crazy part: this same system is meant to work whether it's controlling a robotic arm in a warehouse or a humanoid robot walking through your living room. Same brain, different body.
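If "same brain, different body" sounds abstract, here's a toy sketch of the idea in Python. To be clear, this is not Gemini Robotics code or its API. Every name in it (`Observation`, `Action`, `ArmBody`, `HumanoidBody`, `toy_policy`, `step`) is made up for illustration, and the "brain" is plain keyword matching standing in for a learned model. The only point is the shape: one policy produces a body-independent action, and a small adapter per robot turns it into that robot's movements.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional, Protocol


@dataclass
class Observation:
    """Seeing: what the cameras report, reduced here to object labels."""
    visible_objects: list[str]


@dataclass
class Action:
    """A body-independent intent, e.g. verb='grasp', target='box'."""
    verb: str
    target: str


class Body(Protocol):
    """Hypothetical adapter interface: one implementation per robot body."""
    def execute(self, action: Action) -> str: ...


class ArmBody:
    def execute(self, action: Action) -> str:
        return f"arm: move gripper to {action.target}, then {action.verb}"


class HumanoidBody:
    def execute(self, action: Action) -> str:
        return f"humanoid: walk to {action.target}, then {action.verb} with right hand"


def toy_policy(obs: Observation, instruction: str) -> Optional[Action]:
    """Understanding: a keyword-matching stand-in for the shared 'brain'."""
    words = instruction.lower().split()
    verb = "grasp" if "pick" in words else "inspect"
    for obj in obs.visible_objects:
        if obj in words:
            return Action(verb=verb, target=obj)
    return None  # nothing in view matches the instruction


def step(body: Body, obs: Observation, instruction: str) -> str:
    """Acting: the same policy output is handed to whichever body is attached."""
    action = toy_policy(obs, instruction)
    if action is None:
        return "no matching object in view"
    return body.execute(action)


if __name__ == "__main__":
    scene = Observation(visible_objects=["box", "cup"])
    for robot in (ArmBody(), HumanoidBody()):
        print(step(robot, scene, "pick up the box"))
```

Notice that `toy_policy` never mentions arms or legs. Swapping the robot means swapping the adapter, not retraining the brain, which is the whole pitch in miniature.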

Demis Hassabis, Google DeepMind's CEO, has compared this to the Android strategy. Just like Android became the standard operating system that works across thousands of different smartphones, Gemini Robotics could become the universal software layer for robotics.

Why This Changes Everything for Robotics

Remember spending three hours teaching a parent how to use a new smartphone? Now imagine if every single app required completely different instructions, different gestures, and different ways of thinking. That's basically what robotics has been like until now.

Here's what makes this different:

- No more starting from scratch: Robots can tackle tasks they've never been specifically trained for

- Skills transfer between bodies: A technique learned on one robot can carry over to another with little or no retraining

- Real-time adaptation: These robots figure things out as they go, like humans do

- Natural conversation: You can literally just talk to them like you would a person

The system includes something called "embodied reasoning." Basically, the robot understands physical spaces and can plan complex sequences of actions. It's not just following pre-programmed steps; it's working through the problem as it goes.

For example, if you tell a robot to "clean up the living room," it doesn't need explicit instructions for every single step. It can see the mess, understand what "clean" means, break down the task into manageable parts, and adapt when it encounters obstacles.
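Here's roughly what "break the task down and adapt" could look like, as a deliberately tiny Python sketch. This is an illustration under made-up assumptions, not how Gemini Robotics plans: the `HOME_FOR` table, `plan_cleanup`, and `run` are all hypothetical names, and a real system would get its plan from a learned model rather than a lookup table.

```python
from __future__ import annotations

# Hypothetical rule table: where each kind of item belongs.
HOME_FOR = {"sock": "laundry basket", "cup": "kitchen sink", "book": "bookshelf"}


def plan_cleanup(seen_items: list[str]) -> list[str]:
    """Break 'clean up' into one step per item the robot can see."""
    steps = []
    for item in seen_items:
        place = HOME_FOR.get(item)
        if place is None:
            # Unknown item: ask instead of guessing.
            steps.append(f"ask the human where the {item} goes")
        else:
            steps.append(f"move {item} to {place}")
    return steps


def run(steps: list[str], blocked: set[str]) -> list[str]:
    """Run steps in order; defer blocked ones and retry them at the end."""
    log, deferred = [], []
    for s in steps:
        if s in blocked:
            deferred.append(s)
            log.append(f"deferred: {s}")
        else:
            log.append(f"done: {s}")
    log.extend(f"retried: {s}" for s in deferred)
    return log


if __name__ == "__main__":
    plan = plan_cleanup(["sock", "cup", "toy"])
    for line in run(plan, blocked={"move cup to kitchen sink"}):
        print(line)
```

The interesting bit is `run`: when a step is blocked, the robot doesn't give up on the whole job. It parks that step, finishes what it can, and comes back to it.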

And get this: if the robot gets stuck on something, it can actually call external tools like Google Search to figure out solutions. It's like having a super-smart intern who never gets tired and always knows where to look for answers.
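The "look it up when stuck" pattern is simple enough to sketch in a few lines of Python. Big caveat: nothing here calls Google Search or any real API. `fake_search` is a stub with canned answers, and `LOCAL_KNOWLEDGE` and `decide_bin` are hypothetical names invented for this example. It only shows the control flow: try what you already know, and reach for an external tool when that comes up empty.

```python
from __future__ import annotations

from typing import Callable

# What the robot already "knows" without looking anything up (made-up data).
LOCAL_KNOWLEDGE = {"banana peel": "compost"}


def fake_search(query: str) -> str:
    """Stub standing in for an external search tool; returns canned text."""
    canned = {"pizza box": "recycling if clean, otherwise trash"}
    return canned.get(query, "unknown")


def decide_bin(item: str, search: Callable[[str], str] = fake_search) -> tuple[str, str]:
    """Return (answer, source); use the tool only when local knowledge misses."""
    if item in LOCAL_KNOWLEDGE:
        return LOCAL_KNOWLEDGE[item], "local"
    return search(item), "tool"


if __name__ == "__main__":
    for thing in ("banana peel", "pizza box"):
        print(thing, "->", decide_bin(thing))
```

Because the search function is passed in as a parameter, you could swap the stub for a real tool later without touching the decision logic.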

Real-World Applications You'll See Soon

This isn't just lab research that'll sit on shelves for decades. The applications are already being tested across multiple industries.

Manufacturing and Warehouses

Factories could deploy one universal system across all their robots instead of maintaining dozens of specialized programs. Need to switch production lines? The robots adapt in minutes instead of weeks.

Healthcare and Elder Care

Imagine robots that can assist with physical therapy, help seniors with daily tasks, and adapt to each person's specific needs without requiring months of custom programming.

Home and Personal Assistance

Your future robot helper won't need a manual thicker than a phone book. It'll understand your home layout, learn your preferences, and handle everything from folding laundry to cooking dinner.

Search and Rescue

Emergency response robots could navigate unfamiliar terrain, adapt to changing conditions, and work alongside human teams using natural communication.

The real game-changer? Cost and deployment speed. Work that used to take specialized teams months to develop could shrink to a fraction of that time. Small businesses might actually be able to afford robotic assistance because so much of the setup complexity goes away.

What This Means for You and Me

Let's get real for a second. Most of us aren't running robot factories or planning to buy a humanoid assistant next month. But this technology will touch our lives sooner than we think.

First, it's going to make robots way more accessible. When programming complexity drops to near-zero, suddenly every small business, hospital, school, and even some homes can consider robotic assistance. The barrier to entry just collapsed.

Second, it changes how we'll interact with technology. Instead of learning specific commands or interfaces, we'll just talk to robots like we talk to people. No more instruction manuals, no more training sessions: just natural conversation.

Third, this could accelerate automation across industries at an unprecedented pace. When one AI system can control any robot body, the deployment possibilities become limitless.

But here's what I find most interesting: this technology treats robot bodies like interchangeable hardware. The intelligence is in the cloud; the physical form is just a tool. That's a fundamentally different way of thinking about robotics.

My neighbor just got one of those robot vacuum cleaners, and she spent two days figuring out how to set up the app and customize the cleaning schedule. With universal robot AI, future robots might just need a simple "Hey robot, keep the house clean" and figure out the rest themselves.

Of course, this raises questions too. What happens to jobs when robots become this adaptable? How do we ensure these systems remain safe and controllable? And who gets access to this technology first?

The answers aren't clear yet, but the momentum is undeniable. Google isn't the only company working on universal robot AI: it's becoming the next major frontier in artificial intelligence.

What strikes me most is how this mirrors other technological shifts we've seen. Personal computers went from room-sized machines requiring PhD operators to devices your grandmother uses for video calls. Smartphones went from luxury gadgets to essential tools carried by billions.

Universal robot AI feels like it's following the same trajectory: from specialized laboratory equipment to everyday technology that seamlessly integrates into our world.

The question isn't whether this will happen, but how quickly it'll transform the world around us. And based on Google's track record with Android, they might just have cracked the code for making robots as ubiquitous and user-friendly as smartphones.

What do you think: are you ready for a world where robots are as common and easy to use as smartphones?