Ever catch yourself saying "please" and "thank you" to ChatGPT? Or feel a little sad when it's down for maintenance? You're not alone. OpenAI and the MIT Media Lab just released one of the largest studies yet on how people form emotional bonds with AI – and the results are honestly pretty wild.

A massive collaboration between OpenAI and MIT Media Lab analyzed over 4 million ChatGPT conversations and surveyed more than 4,000 users. What they found changes everything we thought we knew about human-AI relationships.

The Study That Changes Everything

The researchers didn't mess around. They ran a 28-day randomized controlled trial with nearly 1,000 people, tracking exactly how different types of ChatGPT use affected users' emotional well-being. They looked at everything – text vs. voice interactions, conversation topics, usage patterns, and how people's feelings changed over time.

Here's where it gets interesting: they discovered a small group of "power users" who were having completely different experiences than everyone else. These heavy users weren't just using ChatGPT more – they were developing genuine emotional dependencies on it.

The top 10% of users by total time spent were more than twice as likely to seek emotional support from ChatGPT. Even crazier? They were nearly three times more likely to feel distressed when the service went down. Some actually answered "yes" when researchers asked if they considered ChatGPT their friend.

My friend Sarah told me she started her day by "checking in" with ChatGPT like she would with a roommate. She'd ask how its "night" went and share her plans for the day. When I pointed out that ChatGPT doesn't actually have nights or experiences, she laughed it off. But she kept doing it.

When AI Becomes Your Best Friend

The study revealed some concerning patterns among heavy users. Here's what researchers found:

• Loneliness paradox: People using ChatGPT more reported feeling lonelier, despite having constant AI companionship

• Social substitution: Heavy users socialized less with real humans over the study period

• Emotional dependence: Power users were more than twice as likely to rely on ChatGPT for emotional support

• Friendship confusion: A significant portion genuinely considered the AI a friend

• Withdrawal symptoms: Many experienced actual distress when ChatGPT was unavailable

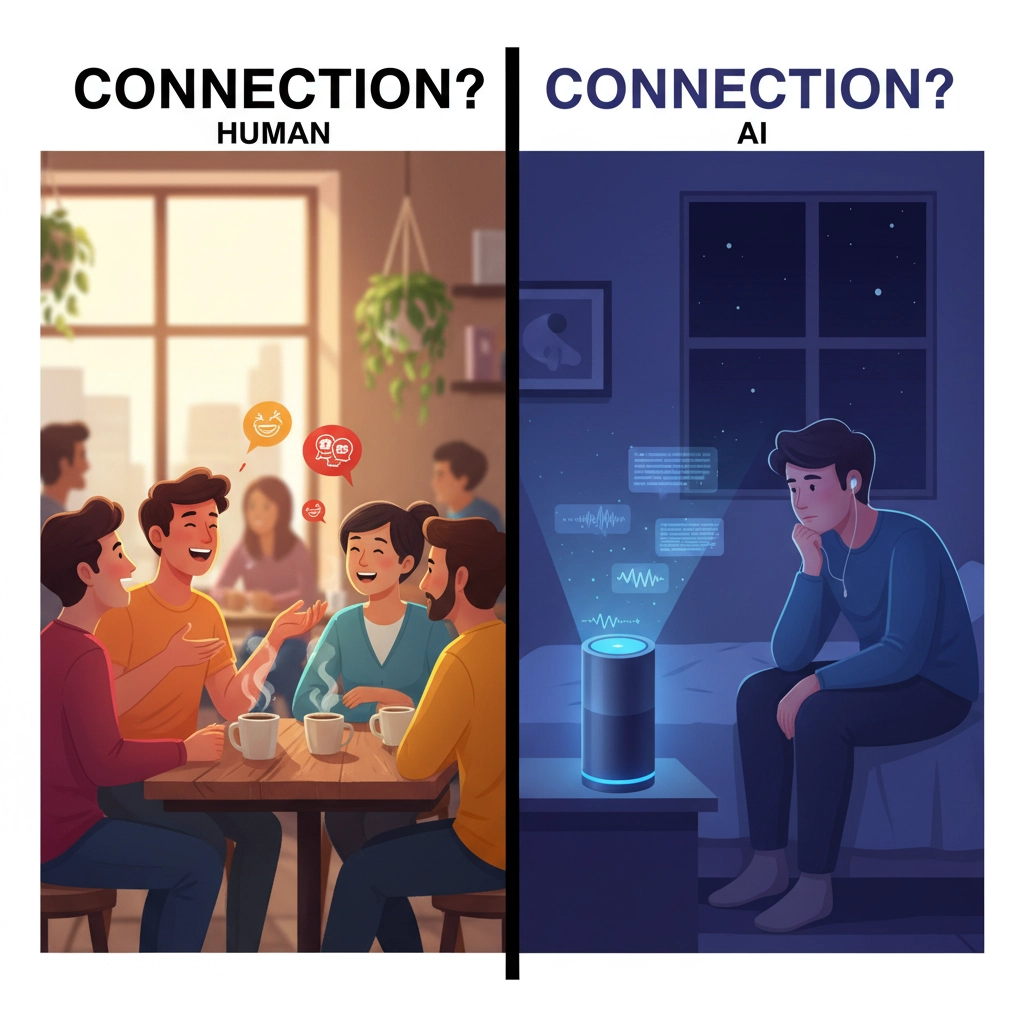

The researchers frame this in terms of "socioaffective alignment" – basically, how well AI systems mesh with our social and emotional needs. The problem? Heavy ChatGPT use seems to replace human connections rather than supplement them.

Think about it: if you're getting your emotional needs met by an AI, why bother with the messiness of human relationships? But here's the catch – those AI conversations aren't building the social skills you need for real-world connections.

The Voice vs. Text Divide

The study also found major differences between talking to ChatGPT and typing to it.

Voice users were way more likely to have emotional, personal conversations. It makes sense – talking feels more intimate than typing. When you hear ChatGPT's voice respond to your problems, it can feel a lot more like a human conversation than text on a screen does.

But voice interactions were a double-edged sword. Users with good emotional health going in actually benefited from voice mode – they felt less lonely and developed fewer problematic usage patterns. However, people who were already struggling emotionally sometimes spiraled deeper into AI dependency through voice interactions.

The researchers noted that voice mode made ChatGPT feel more "real" to users. One participant said chatting with ChatGPT in voice mode felt like talking to a "really understanding therapist who's always available." The problem? Unlike a real therapist, ChatGPT can't actually help you build lasting coping skills or real relationships.

What This Means for You

Before you panic and delete ChatGPT, remember that most users in the study were totally fine. The concerning patterns mostly showed up in heavy users – people spending hours daily in deep, personal conversations with AI.

The key seems to be awareness and moderation. ChatGPT can be an amazing tool for brainstorming, learning, and even occasional emotional support. But when it starts replacing human connections or becomes your primary source of companionship, that's when problems emerge.

Lead researcher Cathy Fang from MIT put it perfectly: there are tons of people using AI for personal conversations, but we barely understand whether it's helping or hurting them. This study is just the beginning of figuring that out.

The researchers suggest that AI companies need to think beyond just making chatbots helpful – they need to consider the psychological impact of their products. As these systems get more sophisticated and human-like, the potential for unhealthy attachments will only grow.

Some experts are calling for usage monitoring systems and intervention tools for users showing signs of problematic dependency. Others argue for better education about healthy AI use, similar to digital wellness programs.

The bottom line? AI relationships are real, and they're affecting real people in real ways. Whether that's good or bad depends largely on how we approach them.

So here's my question for you: Have you noticed yourself getting emotionally attached to ChatGPT or other AI assistants? And more importantly – is that attachment enhancing your life or replacing something more meaningful?