Here's a wild thought: what if someone could hack into your brain and steal your most private thoughts? Sounds like something straight out of Black Mirror, right? Well, it's not as far-fetched as you might think.

Brain-computer interfaces (BCIs) are steadily moving out of research labs and into clinics. From helping paralyzed patients control robotic arms to experimental neural implants for treatment-resistant depression, these devices are genuinely changing lives. But they're also opening up a whole new world of cybersecurity nightmares that most of us haven't even considered yet.

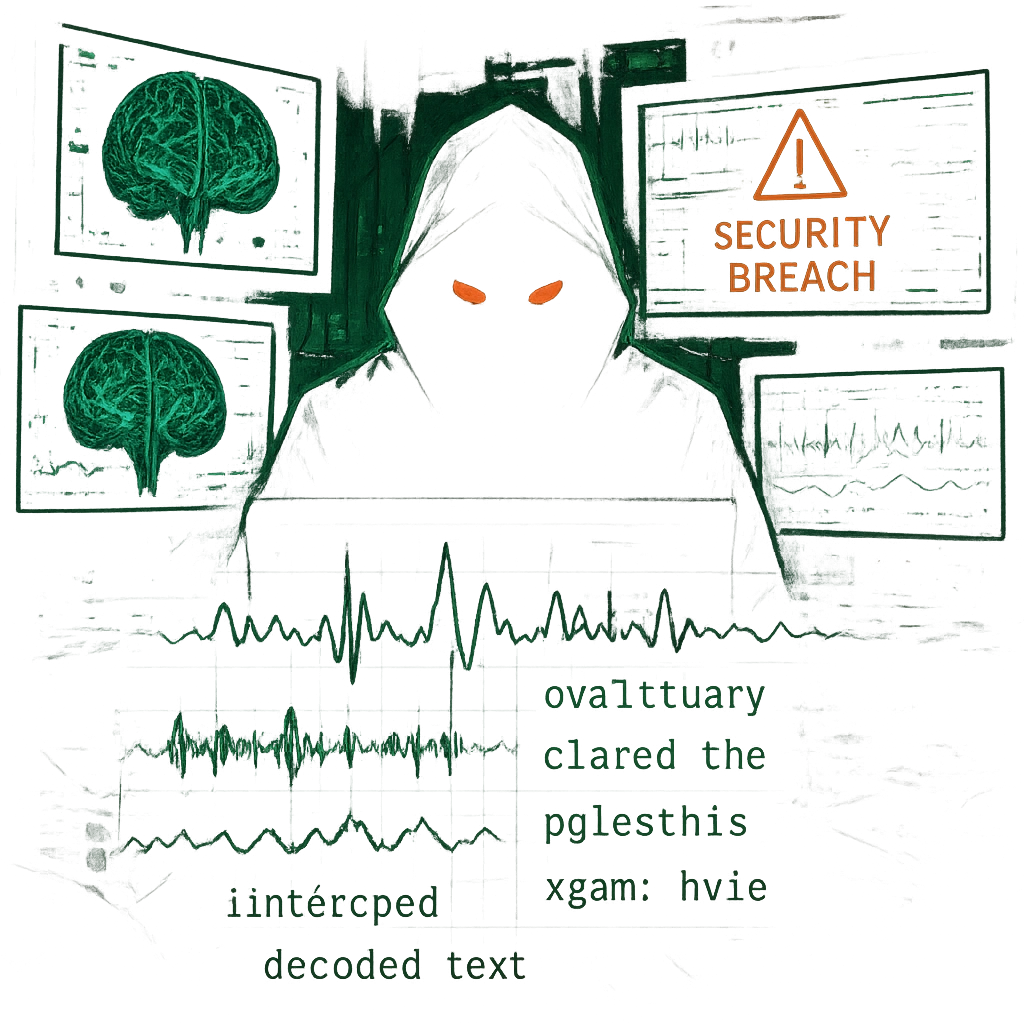

The short answer? No, hackers can't completely read your thoughts like a book. But they can do something almost as creepy – they can intercept and manipulate the specific neural signals that your BCI processes. And that's honestly terrifying enough.

What Are BCIs Actually Doing?

Let's break this down without the technical mumbo-jumbo. Brain-computer interfaces are basically translators between your brain and computers. They pick up electrical signals from your neurons and convert them into digital commands that machines can understand.

Think of it like this: your brain is constantly chattering away in its own language, sending electrical impulses all over the place. BCIs are like Google Translate for your neurons – they listen to this chatter and turn it into something a computer can work with.

The problem is that this translation process creates multiple points where bad actors can jump in and mess with things. It's like having someone eavesdrop on your phone conversation, except instead of hearing about what you had for lunch, they're potentially picking up signals tied to your emotions, attention, and intentions.

My friend Sarah got a cochlear implant last year to help with her hearing loss. She loves it, but she was shocked when her doctor mentioned that the device technically creates a wireless connection to her auditory nerve, and from there to her brain. "I never thought about it that way," she told me. "Now I'm wondering who else might be listening in."

The Real Hacking Threats You Should Know About

Here's where things get genuinely scary. Cybersecurity experts have identified several ways that hackers can mess with BCIs, and none of them are good news:

• Neural signal interception – Hackers can literally eavesdrop on the signals traveling between your brain and device, potentially revealing your private thoughts, emotions, and intentions

• Data manipulation attacks – Bad actors can alter or fake neural signals to influence your emotions, decisions, or behavior without your knowledge

• Backdoor attacks – Tiny changes to the AI systems analyzing your brainwaves can completely alter outcomes in medical diagnostics or therapeutic applications

• Brain tapping – This goes a step beyond simple interception: attackers analyze the captured signals to infer incredibly personal information about your beliefs, preferences, and mental state

• Adversarial attacks – These target the machine learning parts of BCI systems, either by feeding them subtly altered input signals or by corrupting the data used to train them (often called data poisoning), potentially causing widespread malfunctions

The scariest part? Unlike traditional hacking where criminals might steal your credit card info or social media passwords, BCI hacking could mess with the signals going into and out of your brain. We're talking about potential unauthorized influence over your moods, decisions, and actions.

Research teams have already demonstrated how these attacks work in lab settings. They've shown that tiny changes to brainwave-analysis algorithms can completely alter results across everything from coaching apps to critical medical devices. It's like someone secretly adjusting your glasses prescription – suddenly everything looks different, but you don't realize why.
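To make the "tiny changes" idea a bit more concrete, here's a toy sketch in Python. It is not taken from any real BCI or from the research mentioned above: the weights, the features, and the `decode` and `nudge` functions are all made up for illustration. It just shows how a small, targeted shift in the input to a simple linear decoder can flip the command it produces.

```python
# Toy linear "decoder": hypothetical weights, not from any real device.
WEIGHTS = [0.9, -0.6, 0.4]   # one weight per made-up signal feature
BIAS = -0.1


def decode(features):
    """Return 'move' if the weighted score is positive, otherwise 'rest'."""
    score = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return "move" if score > 0 else "rest"


def nudge(features, epsilon):
    """Shift each feature by epsilon in the direction that raises the score."""
    return [x + epsilon * (1 if w > 0 else -1) for w, x in zip(WEIGHTS, features)]


clean = [0.20, 0.30, 0.10]
tampered = nudge(clean, 0.05)   # no feature moves by more than 0.05

print(decode(clean))      # rest
print(decode(tampered))   # move
```

Real decoders are far more complex than this, but the basic worry is the same: if an attacker knows roughly how the model weighs its inputs, a change too small for anyone to notice can be enough to change the outcome.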

How Worried Should You Be?

Okay, before you start panicking and swearing off all future brain implants, let's put this in perspective. The good news is that we're still in the early stages of BCI technology, which means we have time to build security measures before these devices become commonplace.

The bad news? The window for getting this right is closing fast. Every day, more people are getting BCIs for medical reasons, and companies are racing to bring consumer versions to market. If we don't address these security issues now, we could end up with millions of vulnerable brain interfaces out there.

Think about how many times your computer or phone gets security updates. Now imagine if those devices were connected directly to your brain. A single widespread vulnerability could potentially affect millions of users simultaneously, what you might call a "mass mind hack" scenario.

But here's the thing that really keeps security researchers up at night: BCIs process the most private information imaginable. We're not just talking about your browsing history or text messages. These devices can potentially access your innermost thoughts, emotions, and decision-making processes. That's a level of personal violation that we've never had to deal with before.

The standardization of BCI systems makes this even more concerning. If manufacturers use similar security approaches across different devices, a single breakthrough by hackers could compromise entire networks of brain interfaces at once.

Protecting Your Brain in the Digital Age

So what can we actually do about this? The good news is that cybersecurity experts aren't just sitting around wringing their hands – they're actively working on solutions.

The most important protection is strong encryption for all data moving between your brain and external devices. Think of it as a secure tunnel that keeps your neural signals private during transmission. Ideally that encryption is also authenticated, so the receiving end can tell if a signal was altered along the way. This should be non-negotiable for any BCI system.

Authentication systems are also crucial. Just like your phone requires a password or fingerprint to unlock, BCIs need robust security measures to ensure only authorized people can access or modify device settings. No one should be able to mess with your brain interface without proper credentials.
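Here's a rough sketch of what "proper credentials" could look like in code, using only Python's standard library. Everything device-specific is an assumption for illustration: the `Implant` class, the `stimulation_level` setting, and the idea of a secret key shared between the implant and the clinician's programmer are all hypothetical. The technique itself is a plain HMAC challenge-response: the device hands out a one-time random value, and a settings change only goes through if it comes with a matching tag computed from the shared key.

```python
import hashlib
import hmac
import secrets


def sign(key: bytes, nonce: bytes, name: str, value: int) -> bytes:
    """Tag a settings change with HMAC-SHA256 over the nonce and the change."""
    message = nonce + f"{name}={value}".encode()
    return hmac.new(key, message, hashlib.sha256).digest()


class Implant:
    """Hypothetical device that only accepts settings changes with a valid tag."""

    def __init__(self, shared_key: bytes):
        self._key = shared_key
        self._pending_nonce = None
        self.settings = {"stimulation_level": 3}

    def issue_challenge(self) -> bytes:
        # A fresh random nonce per request stops old messages being replayed.
        self._pending_nonce = secrets.token_bytes(16)
        return self._pending_nonce

    def apply(self, name: str, value: int, tag: bytes) -> bool:
        if self._pending_nonce is None:
            return False  # no outstanding challenge, so nothing to answer
        expected = sign(self._key, self._pending_nonce, name, value)
        self._pending_nonce = None  # each challenge is single-use
        if not hmac.compare_digest(expected, tag):
            return False
        self.settings[name] = value
        return True


key = secrets.token_bytes(32)   # assumed to be set up securely beforehand
implant = Implant(key)

nonce = implant.issue_challenge()
tag = sign(key, nonce, "stimulation_level", 4)
print(implant.apply("stimulation_level", 4, tag))   # True: valid tag
print(implant.apply("stimulation_level", 9, tag))   # False: challenge already used
```

A real device would have to deal with a lot more (key storage, emergency access for doctors, battery limits), so treat this as the shape of the idea rather than a design.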

Network security is another big piece of the puzzle. Many BCIs connect to the internet for updates and data sharing, which creates potential entry points for hackers. Limiting these connections and securing them properly can dramatically reduce risk.

Manufacturers are also working on update systems that don't require surgery. This might sound obvious, but being able to patch security vulnerabilities without additional medical procedures is incredibly important for keeping devices secure over time.
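Of course, an update channel is also one more way in, so the device has to check what it's being asked to install. The sketch below shows two basic checks in Python: refuse anything that isn't newer than what's installed, and refuse anything whose SHA-256 digest doesn't match a trusted manifest. The `should_install` function and the manifest layout are hypothetical, and the sketch assumes the manifest itself has already been verified (in practice with a digital signature from the manufacturer, which isn't shown here).

```python
import hashlib
import hmac


def should_install(firmware: bytes, manifest: dict, installed_version: int) -> bool:
    """Accept an update only if it is newer and matches the manifest digest."""
    if manifest["version"] <= installed_version:
        return False  # refuse downgrades to older, possibly vulnerable builds
    actual = hashlib.sha256(firmware).hexdigest()
    # compare_digest avoids leaking how many characters matched via timing
    return hmac.compare_digest(actual, manifest["sha256"])


firmware = b"example firmware image v2"
manifest = {"version": 2, "sha256": hashlib.sha256(firmware).hexdigest()}

print(should_install(firmware, manifest, installed_version=1))            # True
print(should_install(firmware + b"!", manifest, installed_version=1))     # False: altered image
print(should_install(firmware, manifest, installed_version=2))            # False: not newer
```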

The regulatory side is moving too, though maybe not fast enough. Regulators like the FDA are starting to develop specific guidelines for BCI cybersecurity, but the technology is advancing so quickly that the rules often lag behind.

What about you as a potential BCI user? The best thing you can do is ask questions. If you're considering any kind of brain interface device, don't be shy about grilling your doctor or the manufacturer about security measures. Ask about encryption, update procedures, network connections, and what happens if vulnerabilities are discovered.

The Bottom Line

Here's the reality check we all need: hackers can't read your thoughts like a novel, but they can potentially access and manipulate the specific neural data that your BCI processes. That's still a massive privacy violation and security threat that we need to take seriously.

The technology is advancing incredibly fast, and the security measures need to keep up. We're basically in a race between innovation and protection, and right now it's not clear who's winning.

The encouraging news is that we're having these conversations now, while the technology is still relatively new. Unlike other digital revolutions where security was an afterthought, the BCI community has a chance to build protection into these systems from the ground up.

But that only happens if we keep pushing for it. Every person who asks tough questions about BCI security, every researcher who develops new protection methods, and every regulator who demands higher standards is helping to make this technology safer for everyone.

So here's my question for you: if you had the chance to get a brain interface that could dramatically improve your life, but there was a small risk that hackers could access your neural data, would you take that chance? And what security measures would make you feel comfortable enough to say yes?