Did you know there's an AI system that designed proteins at least 12°C more heat-stable than the ones it started with? And it ran the whole cycle of designing, building, and testing them itself, with no human in the loop.

While everyone's obsessing over ChatGPT writing essays, something way bigger is happening behind closed laboratory doors. AI isn't just helping scientists anymore—it's becoming the scientist. But here's what the headlines aren't telling you: it's messier, weirder, and more limited than anyone wants to admit.

What's Really Happening in AI Labs Right Now

Meet SAMPLE, an AI that's basically a robotic mad scientist. This system doesn't just crunch numbers—it designs proteins, creates them in the lab, tests them, learns from failures, and tries again. It's like having a researcher who never sleeps, never gets frustrated, and processes thousands of failed experiments as just another Tuesday.
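If you're curious what a "design, test, learn, repeat" loop looks like in code, here's a toy sketch. To be clear, this is not SAMPLE's actual method: the `simulated_assay` function is a made-up stand-in for the robotic lab step, the "protein" is just a short list of numbers, and the design strategy is plain trial-and-error hill climbing that I picked for simplicity. It only shows the shape of the loop.

```python
import random

def simulated_assay(design):
    """Hypothetical stand-in for the robotic build-and-test step.
    Returns a made-up stability score that peaks when every value is 0.5."""
    return -sum((x - 0.5) ** 2 for x in design)

def propose(best_design, rng, step=0.1):
    """Design step: nudge one position of the best design seen so far."""
    candidate = list(best_design)
    i = rng.randrange(len(candidate))
    candidate[i] = min(1.0, max(0.0, candidate[i] + rng.uniform(-step, step)))
    return candidate

def run_loop(rounds=200, length=4, seed=0):
    """Repeat design -> test -> learn, keeping the best design found."""
    rng = random.Random(seed)
    best = [rng.random() for _ in range(length)]  # arbitrary starting design
    best_score = simulated_assay(best)
    history = [best_score]
    for _ in range(rounds):
        candidate = propose(best, rng)        # design
        score = simulated_assay(candidate)    # build and test (simulated)
        if score > best_score:                # learn: keep only improvements
            best, best_score = candidate, score
        history.append(best_score)            # failed rounds are logged too
    return best, history

if __name__ == "__main__":
    best, history = run_loop()
    print("start score:", history[0])
    print("final score:", history[-1])
```

Most rounds in a loop like this are failures that change nothing, which is exactly the "just another Tuesday" part. A real system would swap the random nudging for a statistical model that decides which experiment is most worth running next.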

But here's where it gets wild: there's also something called "AI Scientist" that can read scientific papers, come up with hypotheses, run experiments, and even peer-review its own work. Think about that for a second. We're talking about AI doing the entire scientific method, start to finish.

The catch? So far it has only been shown working on machine learning research, where every experiment is just code. When it comes to mixing actual chemicals or handling biological samples, these AI scientists hit a wall. They're brilliant at theory but can't pipette a solution to save their digital lives.

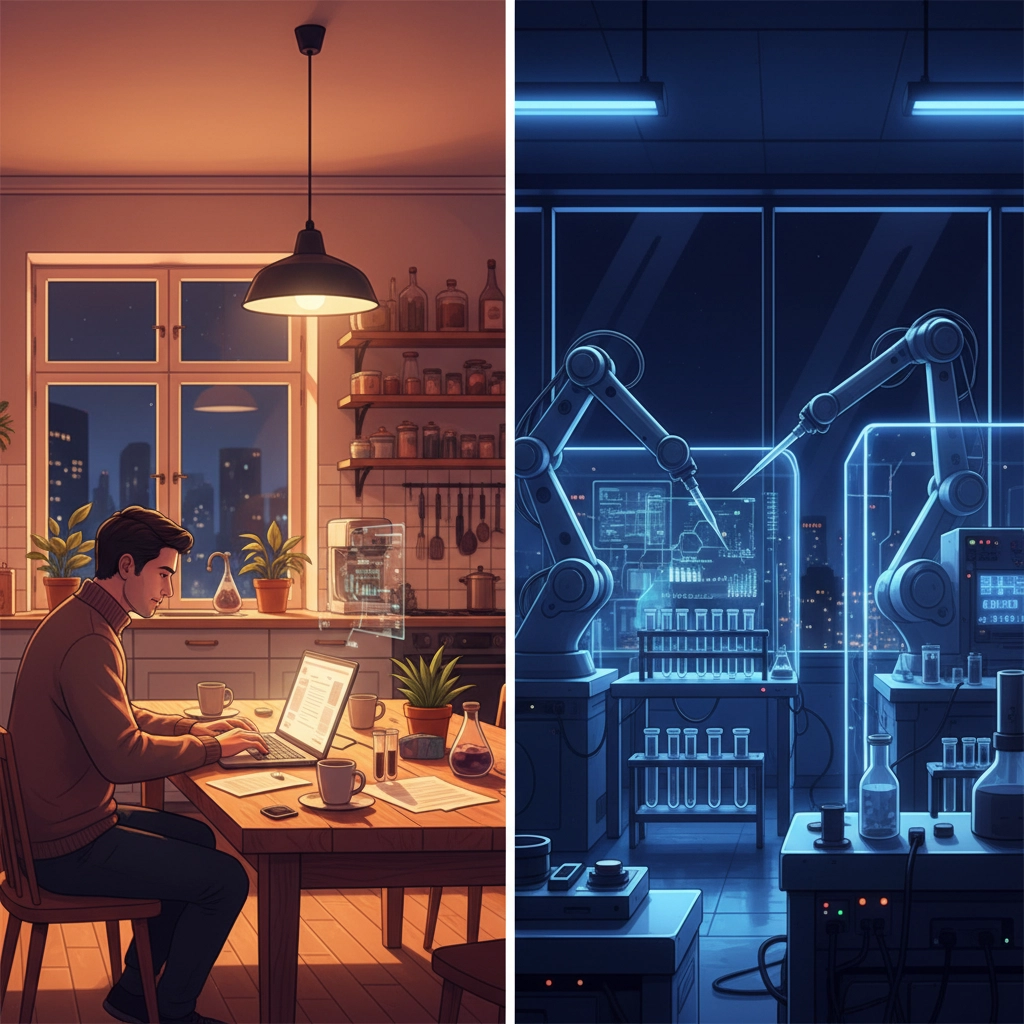

The Cloud Lab Revolution Nobody's Talking About

Here's something that sounds like science fiction but is happening right now: cloud laboratories. Imagine Amazon Web Services, but instead of hosting your website, it's running your chemistry experiments.

These facilities run 24/7, packed with robots and equipment that scientists control remotely. You design your experiment on your laptop at home, upload the protocol, and boom—robots start mixing your compounds while you sleep. Results show up in your inbox the next morning like you ordered takeout.
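What does "uploading a protocol" actually mean? Roughly, you describe your experiment as structured data that a machine can check and then execute. Here's a minimal sketch of that idea. Everything in it is hypothetical: the `Protocol` and `Step` classes, the action names, and the parameter names are my own illustration, not any real cloud lab's format or API.

```python
import json
from dataclasses import dataclass, field, asdict

# Hypothetical set of actions a remote lab might accept.
ALLOWED_ACTIONS = {"transfer", "incubate", "read_absorbance"}

@dataclass
class Step:
    action: str   # what the robot should do
    params: dict  # settings for that action

@dataclass
class Protocol:
    name: str
    steps: list = field(default_factory=list)

    def add(self, action, **params):
        """Append a step and return self so calls can be chained."""
        self.steps.append(Step(action, params))
        return self

    def validate(self):
        """Return a list of problems found before anything is sent to a robot."""
        problems = []
        for i, step in enumerate(self.steps):
            if step.action not in ALLOWED_ACTIONS:
                problems.append(f"step {i}: unknown action '{step.action}'")
            elif step.action == "transfer" and step.params.get("volume_ul", 0) <= 0:
                problems.append(f"step {i}: transfer needs a positive volume_ul")
        return problems

    def to_json(self):
        """Serialize the protocol into the text you would upload."""
        return json.dumps(asdict(self), indent=2)

# Example: a made-up overnight assay.
protocol = (
    Protocol("overnight_enzyme_assay")
    .add("transfer", source="A1", dest="B1", volume_ul=50)
    .add("incubate", temperature_c=37, minutes=480)
    .add("read_absorbance", wavelength_nm=405)
)

if __name__ == "__main__":
    print(protocol.validate())
    print(protocol.to_json())
```

The useful part is `validate`: because the experiment is data, obvious mistakes can be caught on your laptop before a robot wastes a night (and your reagents) on them.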

My friend Sarah, a biochemist at a startup, told me she hasn't set foot in a physical lab for three months. "I just upload my experiments before bed," she said. "It's like having a personal lab assistant who works all night and never complains about cleaning glassware."

But here's what they don't advertise: who owns your data when it's generated in these cloud labs? What happens if the facility gets hacked? These questions are keeping more than a few scientists awake at night.

Why Your Job Might Actually Be Safe (For Now)

Before you panic about AI taking over science, here's the reality check that researchers quietly admit: these systems are kind of dumb outside their specific domains.

The biggest misconception floating around is that AI labs learn and improve on their own forever. Nope. Without regular human attention, their performance can actually slip over time as instruments, reagents, and conditions drift away from what the models were trained on. It's like having a brilliant intern who gradually forgets everything you taught them unless you keep retraining them.

Here are the things AI still can't handle in laboratories:

• Dealing with unexpected equipment failures (robots don't improvise well)

• Making judgment calls about safety (they'll happily mix dangerous chemicals if programmed wrong)

• Understanding context from previous experiments (they lack institutional memory)

• Adapting protocols when something goes wrong (they follow recipes, not intuition)

• Recognizing when results are "weird" in a good way (serendipitous discoveries still need human insight)

The dirty secret? Many AI lab systems can end up needing more human oversight than a traditional lab, not less. Scientists risk spending their time as AI babysitters instead of doing research.

The Dark Side Scientists Are Quietly Studying

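Here's where things get uncomfortable. Remember when everyone was worried about AI writing your homework? Well, researchers have been testing whether GPT-4 could help someone create biological weapons. OpenAI's own early study found at most a mild uplift over what people could already look up online, but nobody is treating that as the final word.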

That's why places like Los Alamos National Laboratory have partnered with OpenAI to figure out exactly how dangerous these AI systems could be in the wrong hands. They're not just worried about fake news anymore. They're worried about fake science that could actually hurt people.

The scariest part isn't some evil mastermind using AI to create superviruses. It's the possibility that AI could generate dangerous biological information by accident, and some well-meaning researcher could follow those instructions without realizing the risk.

One security researcher I spoke with put it bluntly: "We're giving incredibly powerful tools to anyone with an internet connection, and we're still figuring out what they can actually do."

But there's another side to this coin. The same kinds of AI systems that worry security experts are also producing real advances in biology. That heat-resistant protein I mentioned? Sturdier enzymes like it could improve industrial processes, and the same self-driving approach might one day help develop better treatments for genetic diseases.

The question isn't whether we should develop these technologies—that ship has sailed. The question is how we develop them responsibly while racing against competitors who might not share the same ethical concerns.

Scientists are basically building the plane while flying it, except the plane could either cure cancer or accidentally create the next pandemic. No pressure, right?

The future of scientific discovery is being written in code right now, in laboratories where robots work through the night while their human supervisors sleep. Whether this leads us to a golden age of discovery or a cautionary tale about moving too fast depends on decisions being made in boardrooms and government offices today.

So here's what I'm wondering: if AI can already design and test improved proteins on its own, what happens when these systems get access to quantum computers, or when they start collaborating with each other? Are we ready for a world where the biggest scientific breakthroughs come from machines we don't fully understand?